With each passing day, software is becoming more deeply embedded in everyday systems used by millions, if not billions, of people around the world. From smartwatches that can detect arrhythmia to autonomous vehicles, surgeons practicing procedures in the metaverse and smartphones that can connect to satellite constellations, technologies that were previously used for leisure are taking on new use cases and purposes.

The embedded software market is growing, and as we move into an age of automation and the digitalization of processes that were previously manual, technology is slowly becoming responsible for more and more aspects of our safety.

Everyone working in tech needs to be prepared for this paradigm shift. In an age when everything is connected, we are reaching a point where no technology will be able to exist without considering its implications for safety, whether physical, financial or otherwise. This will bring with it a wave of changes, including new certifications, new regulations and new ways of developing technologies that users can trust and rely upon.

How can we define something as ‘safe’?

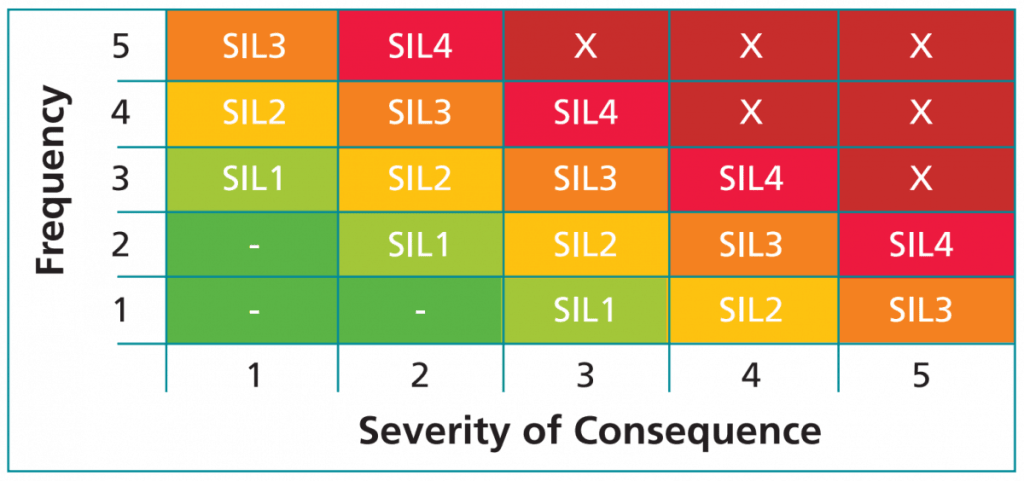

My background is in the safety-critical software domain, and I think the rest of the tech industry can learn a lot from it. Safety-critical projects tend to be categorised using Safety Integrity Levels (SILs). The higher the SIL, the greater the risk associated with a failure of the system. Thus, the higher the SIL, the more rigorous the verification and validation activities must be in order to minimise the probability of a system failure.

Systems, whether software, hardware or both, can be certified to one of these SILs, and we can use this certification as a starting point for defining what ‘safe’ looks like. Certification plays a key role in developing safety-critical software because it provides assurance of the standard and quality of the software produced. I believe emerging industries like the metaverse will need to introduce stronger regulatory certification requirements related to safety, because this will help users and investors feel reassured about the technology's safety and quality. Certification is not the only thing to consider, but it's a good first step.

How do we create safe software?

There are a huge number of technologies that developers, testers and engineers can use to develop reliable, high-quality software. I won’t go into detail in this blog, but some examples from the safety-critical world include SPARK, Rust (and most recently Ferrocene), and the Contestor method of verification. This is, of course, in addition to the usual static and dynamic verification and validation activities. It will come as no surprise that test activities are vital in developing software we can trust! Quality assurance activities are also critical in this space: we need to remove as much doubt as we can about the reliability of our products.

The tools and processes are only part of the whole picture. Whilst they can help make our software reliable and trustworthy in a much shorter timescale, and have huge benefits when it comes to proving performance for certification purposes, these tools are nowhere near as useful if they aren’t used with the right intentions and in the right culture. They are just one part of a wider ecosystem.

I want to leave you with two things I think everyone working in tech should be thinking about as we move into an era in which we need to develop tech we can trust.

1. Trust comes from quality.

Quality must be at the heart of everything we do and be our top priority. Great ways to achieve this include starting testing early, having testers work with developers on exploratory exercises to identify edge cases, and making sure everyone on the team understands the importance of quality. It isn't only testers who should have a curious, testing mindset!

2. Diversity is vital.

Diversity of thought allows us to think about situations in new ways: people with different backgrounds may identify different gaps in the requirements or test cases based on their experiences. Having a diverse group of people working on critical systems that need to be trusted not only means we identify potential issues faster (because of the varied experiences we all bring), which reduces development costs, but also gives us more assurance that our software is of high quality for everyone who uses it.

In summary: yes, we can build tech we can trust. But only if we have the right environment to do so, which includes access to the right tools, the right culture at work and the right people on the team.
